A crawler, also known as a web crawler, spider, or bot, is an automated program used by search engines to browse and index the internet systematically. Crawlers visit web pages, follow links, and collect data to build and update search engine indexes, ensuring that relevant and up-to-date content is available for search queries.
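The visit, follow, and collect loop described above can be sketched in a few lines of Python. This is only a minimal illustration, not how any particular search engine works: the `crawl` function, the `LinkExtractor` class, and the in-memory `FAKE_SITE` pages are all hypothetical names made up for the example, and the "fetch" step reads from a dictionary instead of the network so the sketch runs offline.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch, max_pages=100):
    """Breadth-first crawl; returns {url: html} for every page visited.

    `fetch` is any callable that returns the HTML for a URL, or None
    if the page is unavailable (an assumption made for this sketch).
    """
    index = {}
    queue = deque([start_url])
    seen = {start_url}
    while queue and len(index) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        index[url] = html  # "collect data": store the page content
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:  # "follow links"
            # Resolve relative links and drop #fragments so the same
            # page is not queued twice under different URLs.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return index


# Hypothetical three-page site held in memory instead of on the web.
FAKE_SITE = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog": '<a href="/about#team">Team</a>',
}

pages = crawl("https://example.com/", FAKE_SITE.get)
print(sorted(pages))
```

A real crawler would also respect robots.txt, limit its request rate, and handle network errors, all of which are left out here to keep the core loop visible.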

How thoroughly and how often a crawler covers the web affects how accurate and complete a search engine’s results can be. Website owners can make crawling and indexing easier by publishing sitemaps and keeping their site’s structure clear and well linked.
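As a rough illustration of what a sitemap contains, the sketch below builds a small XML sitemap with Python's standard library. The `build_sitemap` helper and the example URLs and dates are hypothetical; the `urlset`, `url`, `loc`, and `lastmod` element names and the namespace follow the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Return sitemap XML for a list of (url, last_modified) pairs.

    Dates are expected as YYYY-MM-DD strings.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical pages and dates, for illustration only.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/new-post", "2024-02-01"),
])
print(sitemap_xml)
```

The resulting file is usually saved as `sitemap.xml` at the site root, where crawlers can read it to discover pages that internal links alone might not surface quickly.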

Example

“We updated our website’s sitemap to help search engine crawlers index our new content more efficiently.”

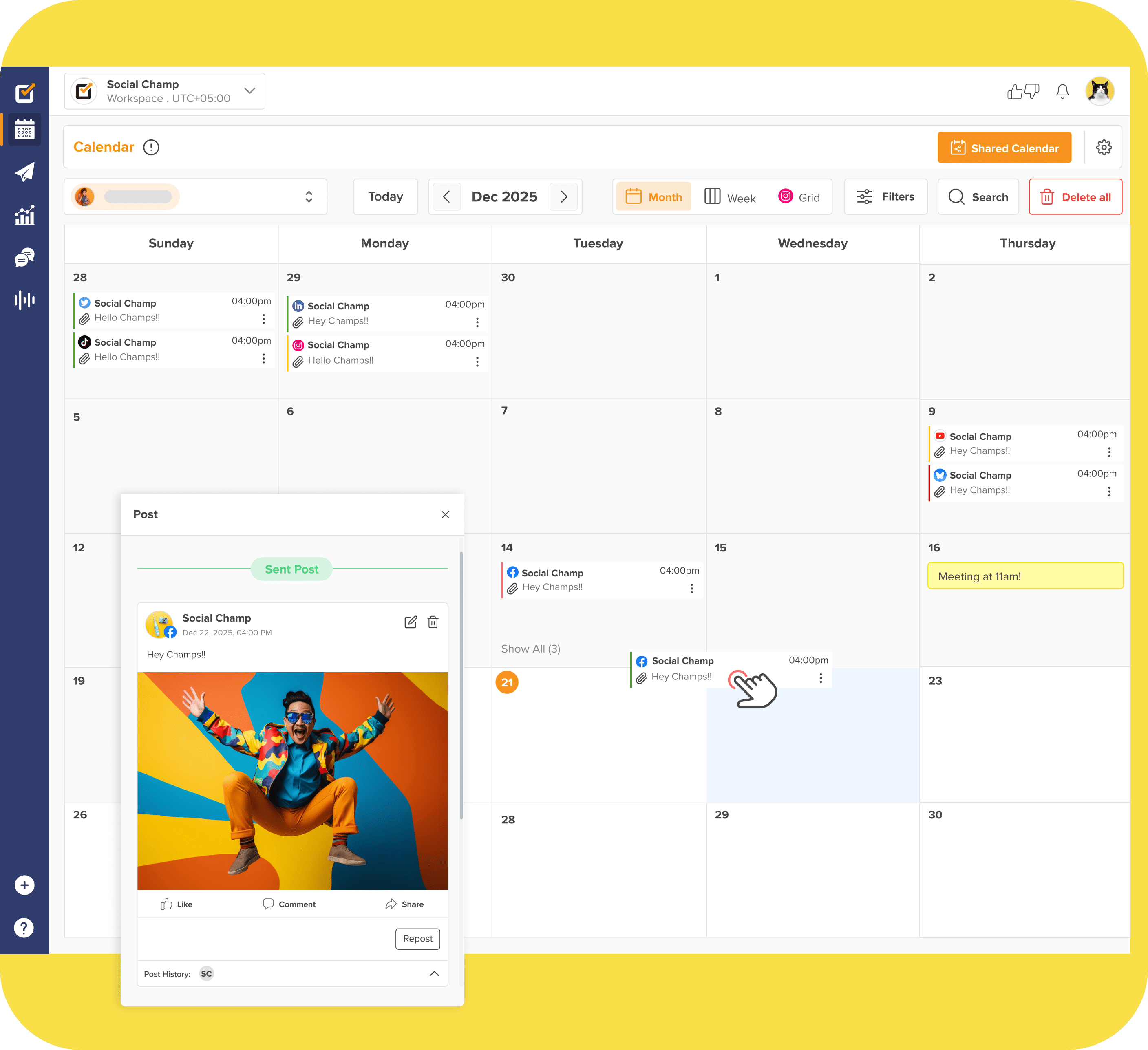
